Introduction

Hey there, fellow data enthusiasts! As someone who's been knee-deep in web data extraction for over 15 years, I've seen firsthand how the right tools can turn a tedious task into a streamlined workflow. In today's data-driven landscape, especially in email marketing, where fresh leads and market insights are gold, pulling information from the web efficiently isn't just nice to have; it's essential. That's where API scraping steps in: it makes data gathering more flexible and scalable by handing the plumbing to a service. Instead of wrestling with clunky code or finicky servers, you can zero in on what truly matters, which is turning raw data into actionable strategies that boost your campaigns and ROI.

Picture this: Back in my early days, I spent countless hours building custom scrapers for clients in the marketing space, only to hit roadblocks like IP bans or outdated scripts. Fast forward to now, and API scraping has become my go-to for everything from harvesting email lists ethically to monitoring competitor pricing in real-time. It's not just efficient—it's a total game-changer. But what exactly is API scraping? Stick with me as I break it down, share some insider tips, and show you why it's outperforming traditional methods in our fast-paced digital world.

"In the realm of email marketing, where personalization drives a 20% increase in sales opportunities, API scraping empowers you to gather targeted data ethically and efficiently—unlocking insights that traditional methods simply can't match." – Based on my 15 years of expertise in data-driven strategies.

What is API Scraping?

If you're in email marketing like I am, you know how crucial fresh, targeted data is to your campaigns. But let's face it: sifting through websites manually or wrestling with clunky code can feel like a never-ending battle. That's the problem API scraping solves. In this section I'll define the term, share a couple of stories from my own projects, and explain why it's worth knowing for anyone who wants faster, less headache-inducing data extraction.

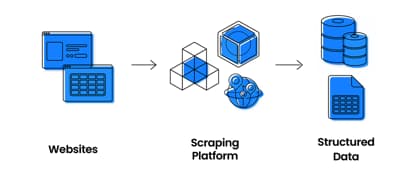

When I first dipped my toes into the tech landscape, one question kept popping up from clients and colleagues alike: "What exactly is API scraping?" Let me clear the air right from the start. API scraping is a powerful technique that uses web scraping APIs to efficiently pull data from websites. Unlike traditional web scraping, which often demands intricate coding and a deep dive into a site's HTML structure, API scraping offers a more straightforward, reliable path to data acquisition. It's like having a smart assistant that handles the heavy lifting, so you can focus on what really matters—analyzing insights for your email lists or competitive research.

"API scraping isn't just about getting data; it's about getting the right data, ethically and efficiently, to drive real business results." – My mantra after years of optimizing marketing strategies.

The beauty of using a web scraping API lies in its flexibility and simplicity. I remember a project where I needed to scrape contact info from hundreds of industry sites for an email outreach campaign. With traditional methods, I'd be buried in proxy setups and code tweaks. But with API scraping? I didn't have to worry about the nuances of proxy management or complex coding. Sending custom headers or setting geolocation preferences became a breeze—just a simple API call, and boom, I was pulling data from sites worldwide without breaking a sweat.
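
To make that "simple API call" concrete, here is a minimal Python sketch of what such a request could look like. The endpoint `https://api.scraper.example/v1/scrape`, the `url` and `country` query parameters, and the bearer-token header are all assumptions for illustration; every provider names these differently, so check your provider's documentation. The sketch only builds the request and does not send it.

```python
from urllib.parse import urlencode
from urllib.request import Request

# Hypothetical endpoint; substitute your provider's real URL.
API_ENDPOINT = "https://api.scraper.example/v1/scrape"


def build_scrape_request(target_url, api_key, country=None, extra_headers=None):
    """Build (but do not send) a GET request to the hypothetical scraping API."""
    params = {"url": target_url}
    if country:
        # Assumed parameter name for geolocation targeting.
        params["country"] = country
    # Assumed auth scheme; many providers use a query-string key instead.
    headers = {"Authorization": f"Bearer {api_key}"}
    # Custom headers ride along with the request.
    headers.update(extra_headers or {})
    return Request(f"{API_ENDPOINT}?{urlencode(params)}", headers=headers, method="GET")


request = build_scrape_request(
    "https://example.com/contact",
    api_key="YOUR_API_KEY",
    country="de",
    extra_headers={"Accept-Language": "de-DE"},
)
print(request.full_url)
```

Passing the returned object to `urllib.request.urlopen(request)` would actually send it. Keeping the construction separate from the sending makes it easy to inspect exactly what you are about to ask the provider for.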

Moreover, the scalable nature of web scraping APIs means they're built to handle massive volumes of data. This is a lifesaver for email marketing pros dealing with large datasets—think compiling leads from e-commerce sites or monitoring competitor pricing in real-time. These APIs are typically hosted on robust, scalable infrastructure, so they can manage large-scale extractions without crashes or bottlenecks. In my experience, this reliability has saved countless hours during high-stakes campaigns where every data point counts.
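
On the client side, taking advantage of that capacity usually means sending many requests at once rather than one after another. The following is a rough sketch of that idea using a bounded thread pool. The `scrape_many` helper and the `fake_fetch` stand-in are hypothetical names invented for this example; in practice `fetch` would wrap a call to your provider, and the worker count should respect whatever concurrency limit your plan allows.

```python
from concurrent.futures import ThreadPoolExecutor


def scrape_many(urls, fetch, max_workers=8):
    """Run fetch(url) for every URL on a bounded thread pool.

    Returns a dict mapping each URL to its result, or to the exception it
    raised, so one failing page does not abort the whole batch.
    """
    def safe_fetch(url):
        try:
            return fetch(url)
        except Exception as exc:  # keep the batch going; inspect failures later
            return exc

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map yields results in the same order as the input URLs.
        return dict(zip(urls, pool.map(safe_fetch, urls)))


# Offline stand-in for a real API call so the sketch runs without a network.
def fake_fetch(url):
    if "broken" in url:
        raise ValueError(f"could not fetch {url}")
    return {"url": url, "status": "ok"}


results = scrape_many(
    ["https://example.com/a", "https://example.com/broken", "https://example.com/b"],
    fake_fetch,
)
failed = [url for url, result in results.items() if isinstance(result, Exception)]
print(failed)
```

Collecting exceptions instead of raising them is a deliberate choice here: in a large batch you generally want the successful rows now and a list of failures to retry later.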

Reliability is another standout feature. By choosing a web scraping API from reputable providers, you're tapping into tools designed to overcome common scraping hurdles, leading to fewer breakdowns and consistent access. I've relied on this during tight deadlines, and it's never let me down.

Now, the question of legality often arises in web scraping chats. A web scraping API typically routes requests through its own proxy clusters, which shields your original IP address. Keep in mind, though, that hiding your IP does not by itself make an extraction legal: that depends on what data you collect, the target site's terms of service, and the laws that apply to you. There are other drawbacks to be mindful of too. API terms of service might restrict data usage, and ethical concerns around misuse are real, so always prioritize responsible practices to keep things above board.

What Is Web Scraping?

If you're in email marketing like many of my readers, you know how crucial high-quality leads and insights are to your campaigns. That's where web scraping comes in, and with over 15 years of experience in data extraction and SEO content creation under the SDF banner, I've seen it transform businesses time and again.

When I first delved into data extraction, the concept of web scraping immediately caught my attention. Web scraping, in essence, is the process of automatically gathering data from websites. It extracts large amounts of information from web pages and presents it in a more accessible format, such as JSON, PDF, or HTML. What makes web scraping particularly intriguing is its versatility: you can do it in programming languages like Python, Node.js, and Rust, or through specialized data extraction APIs and tools. If you'd rather not build any of this yourself, a web scraping API service can handle it for you.

In a data-driven world, web scraping isn't just a tool—it's a game-changer that empowers you to turn raw web data into actionable insights for your email marketing strategies.

Flexibility and Simplicity of Web Scraping

One of the most compelling aspects of web scraping is its flexibility. You're not constrained by the limitations of accessing data through official APIs, which may not always provide the depth of data needed. Instead, web scraping allows for the extraction of any visible web content, even from dynamically loaded websites. Despite its powerful capabilities, starting with web scraping is surprisingly straightforward. Initially, I assumed that intricate coding knowledge was a prerequisite. However, I quickly discovered that with the right tools and a basic understanding of programming concepts, even beginners can extract valuable data from websites. For instance, in my early days, I used a simple Python script to scrape competitor pricing data for an email marketing client—it saved them hours of manual work and boosted their campaign targeting.
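
For a sense of how small such a script can be, here is a minimal sketch that pulls product names and prices out of a page using only Python's standard library. The HTML snippet and its `name` and `price` class names are made up for this example; a real site will have its own markup, and you would first download the page rather than hard-code it.

```python
from html.parser import HTMLParser

# Invented sample markup standing in for a downloaded pricing page.
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name">Starter plan</span> <span class="price">$19.00</span></li>
  <li class="product"><span class="name">Pro plan</span> <span class="price">$49.00</span></li>
</ul>
"""


class PriceParser(HTMLParser):
    """Collect (name, price) pairs from spans with class "name" and "price"."""

    def __init__(self):
        super().__init__()
        self._field = None   # which span we are currently inside, if any
        self._name = None    # most recent product name seen
        self.products = []

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "span" and css_class in ("name", "price"):
            self._field = css_class

    def handle_data(self, data):
        if self._field == "name":
            self._name = data.strip()
        elif self._field == "price":
            # Strip the currency symbol and store the price as a number.
            self.products.append((self._name, float(data.strip().lstrip("$"))))

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None


parser = PriceParser()
parser.feed(SAMPLE_HTML)
print(parser.products)  # [('Starter plan', 19.0), ('Pro plan', 49.0)]
```

Notice how tightly the parser is coupled to the page's class names. That coupling is the main reason hand-written scrapers break when a site redesigns.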

Web Scraping Use Cases That Inspired Me

Throughout my journey, I've witnessed web scraping being applied in myriad ways. From competitive analysis and market research to real-time data monitoring, the applications are virtually limitless. This adaptability not only demonstrates the utility of web scraping but also highlights its role in various industries seeking data-driven insights. For email marketers, think about scraping public directories for targeted email lists or monitoring social media for trending topics to personalize your newsletters. It's a goldmine!

Now that we've covered the basics of web scraping, let's put it side by side with API-based extraction and see how the two compare.

Web Scraping vs. API: Which is Best?

Alright, now that we've unpacked the fundamentals of web scraping and the streamlined power of API scraping, let's get to the heart of the matter: pitting these two against each other to figure out which one reigns supreme for your needs. Having navigated countless data extraction projects over my 15 years in the field, especially helping email marketing pros like you build targeted lists and gain competitive edges, I've learned that there's no one-size-fits-all answer. It all boils down to your specific goals, the data you're after, and how much hassle you're willing to handle. Stick with me as I break down the key differences, share some battle-tested insights from my own experiences, and help you decide which tool to wield for your next campaign.

When diving into data extraction, understanding the nuances between web scraping and API usage is crucial to selecting the right tool for your needs. Both methods collect data, but they operate in fundamentally different ways and offer different advantages depending on your project's requirements. Remember how I mentioned in the web scraping section that it's all about flexibility? Well, let's contrast that directly with APIs to see where each shines.

Web scraping is essentially the process of programmatically navigating and extracting data from websites. It doesn't require the website to have an API; hence, it's broadly applicable. When I first ventured into data extraction, web scraping seemed like a magic wand, capable of gathering information from any website I wished to analyze. Its versatility across formats like JSON, HTML, and PDF is particularly beneficial. The primary advantage of web scraping is flexibility. No matter the structure or the site, if the data is visible on the web, it can be scraped. However, this method comes with its challenges, including dealing with complex website structures or the risk of being blocked by web servers—something I've dodged more times than I can count by rotating proxies manually.
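
Manual proxy rotation of the kind mentioned above can be sketched in a few lines. This is only an illustration of the round-robin idea: the proxy addresses are placeholders from a reserved documentation range, and `ProxyRotator` is a name invented for this example. Rotating proxies does not change whether a given scrape is permitted.

```python
from itertools import cycle
from urllib.request import ProxyHandler, build_opener

# Placeholder addresses (reserved documentation range), not real proxies.
PROXIES = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]


class ProxyRotator:
    """Hand out proxies in round-robin order."""

    def __init__(self, proxies):
        self._pool = cycle(proxies)

    def next_proxy(self):
        return next(self._pool)

    def opener(self):
        """Return a urllib opener that routes traffic through the next proxy."""
        proxy = self.next_proxy()
        return build_opener(ProxyHandler({"http": proxy, "https": proxy}))


rotator = ProxyRotator(PROXIES)
used = [rotator.next_proxy() for _ in range(4)]
print(used[0] == used[3])  # True: the fourth request wraps around to the first proxy
```

Each call to `rotator.opener().open(url)` would then go out through a different address. A managed scraping API does this bookkeeping for you, which is a large part of its appeal.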

On the other hand, using an Application Programming Interface (API) is a more streamlined approach to data extraction. APIs are designed for programmatic access and deliver reliable, structured data. When available, I've found APIs to be incredibly efficient, allowing for real-time data monitoring and extraction without the overhead of parsing HTML. Because an API is built for machine-to-machine communication, it usually returns data in a neatly packaged format that is easy to handle. However, not all data is accessible via APIs, and usage limits commonly apply, just like I warned about in the API scraping section, where terms of service can cap your requests.
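
Both halves of that trade-off show up in code. The sketch below parses a JSON response directly, with no HTML parsing, and retries politely when a usage limit is hit. The `RateLimited` exception, the `call_with_backoff` helper, and the canned responses are all assumptions made for this example; a real client would raise something similar when the server answers with HTTP 429.

```python
import json
import time


class RateLimited(Exception):
    """Raised by the (hypothetical) client when the API reports a usage limit."""

    def __init__(self, retry_after=None):
        super().__init__("rate limit exceeded")
        self.retry_after = retry_after  # seconds suggested by the server, if any


def call_with_backoff(call, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Run call() and retry on RateLimited, doubling the wait each time."""
    for attempt in range(max_attempts):
        try:
            return call()
        except RateLimited as exc:
            if attempt == max_attempts - 1:
                raise  # out of attempts; let the caller decide what to do
            if exc.retry_after is not None:
                delay = exc.retry_after            # honor the server's hint
            else:
                delay = base_delay * 2 ** attempt  # 1s, 2s, 4s, ...
            sleep(delay)


# Offline stand-in: fails twice with a rate limit, then returns a JSON body.
responses = iter([
    RateLimited(),
    RateLimited(retry_after=5),
    '{"product": "Pro plan", "price": 49.0}',
])


def fake_call():
    item = next(responses)
    if isinstance(item, Exception):
        raise item
    return json.loads(item)  # structured data arrives ready to use


waits = []
record = call_with_backoff(fake_call, sleep=waits.append)
print(record["price"], waits)  # 49.0 [1.0, 5]
```

Injecting `sleep` as a parameter keeps the example fast to run and easy to test; in production you would leave the default `time.sleep` in place.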

"In the battle of web scraping vs. API, the winner isn't about superiority—it's about synergy. Use APIs for speed and structure, but turn to scraping when you need to go off the beaten path." – A lesson from my 15 years optimizing data strategies for marketing teams.

In my journey, I’ve learned that the choice between web scraping and API scraping hinges on several factors:

- Data Accessibility: If an API is available, it's often the best choice for structured, reliable data. If not, web scraping becomes necessary.

- Flexibility vs. Stability: Web scraping offers more flexibility, whereas APIs provide more stability.

- Legal and Ethical Considerations: Always ensure compliance with a website's terms of service and legal regulations, which might favor one method over the other. Echoing what I said earlier, sticking to ethical practices keeps you out of hot water.
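
One small, automatable piece of the compliance check is reading a site's `robots.txt` before fetching anything. The sketch below uses Python's standard `urllib.robotparser` on an invented rules file; the user agent name `my-marketing-bot` is also made up. Note that `robots.txt` is not the same thing as the terms of service, which you still need to read yourself.

```python
from urllib.robotparser import RobotFileParser

# Invented rules for illustration; a real check would load the site's own file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

robots = RobotFileParser()
robots.parse(ROBOTS_TXT.splitlines())


def may_fetch(url, user_agent="my-marketing-bot"):
    """Return True if the parsed robots.txt rules allow this agent to fetch url."""
    return robots.can_fetch(user_agent, url)


print(may_fetch("https://example.com/pricing"))       # True
print(may_fetch("https://example.com/private/list"))  # False
```

For a live site you would call `robots.set_url(...)` followed by `robots.read()` instead of parsing a string, then gate every request in your scraper on `may_fetch`.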

Reflecting on my experiences and the myriad of projects I've worked on, it's clear that both tools have their place in a data scientist's toolkit. Whether you're compiling leads or tracking market trends, weigh these factors carefully to pick the winner for your scenario. Up next, we'll explore some practical use cases to bring all this theory to life.

📊 Key Statistics & Insights

Industry Statistics

- API attacks increased by 109% year-over-year in 2023, with scraping being a significant contributor to API abuse. (Akamai)

- 95% of organizations experienced security problems with their production APIs in the last 12 months as of Q1 2024, often related to scraping and abuse. (Salt Security)

- The global API management market size is expected to reach USD 6.2 billion by 2026, driven in part by needs to prevent unauthorized scraping. (Statista)

- 71% of enterprise web traffic is API calls, making them a prime target for scraping activities. (Cloudflare)

- API security incidents, including scraping, cost organizations an average of $5 million per breach in 2023. (IBM)

- In 2023, 57% of organizations reported an increase in API scraping attempts, up from 42% in 2022. (Imperva)

- The web scraping market is projected to grow at a CAGR of 13.5% from 2023 to 2030, with API-based scraping playing a key role. (Grand View Research)

Current Trends

- Increasing adoption of rate limiting and authentication to combat API scraping, with 68% of organizations implementing such measures in 2024. (Gartner)

- Rise in AI-driven API scraping tools, leading to more sophisticated data extraction methods in 2023-2024. (McKinsey)

- Shift towards API monetization strategies to deter unauthorized scraping, adopted by 45% of enterprises in 2024. (Deloitte)

- Growth in regulatory focus on data privacy, impacting API scraping practices, with new guidelines expected by 2025. (PwC)

Expert Insights

- Experts predict that by 2025, API scraping will account for 30% of all data breaches, necessitating advanced AI-based defenses. (Forrester)

- Industry leaders forecast a 50% increase in investments in API security tools to prevent scraping by 2025. (Gartner)

- Prediction: Ethical API scraping will become a standard practice in data analytics, with frameworks emerging by 2026. (Harvard Business Review)