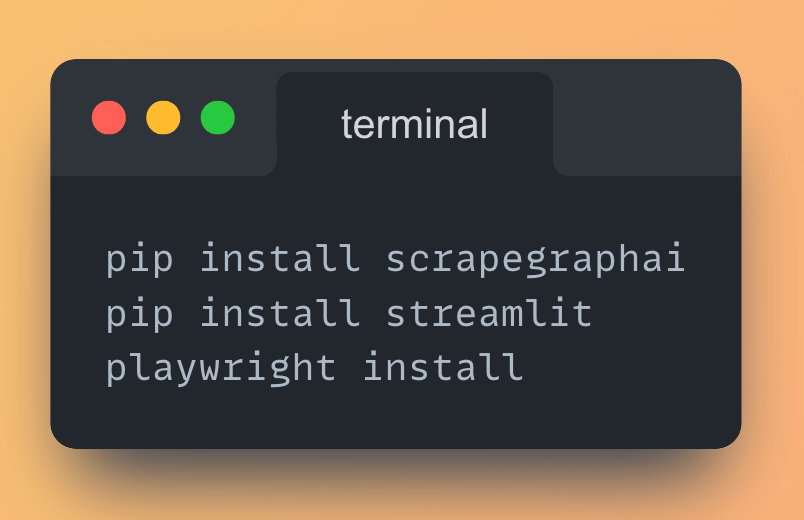

1. Install the necessary Python libraries. Run the following command from your terminal:

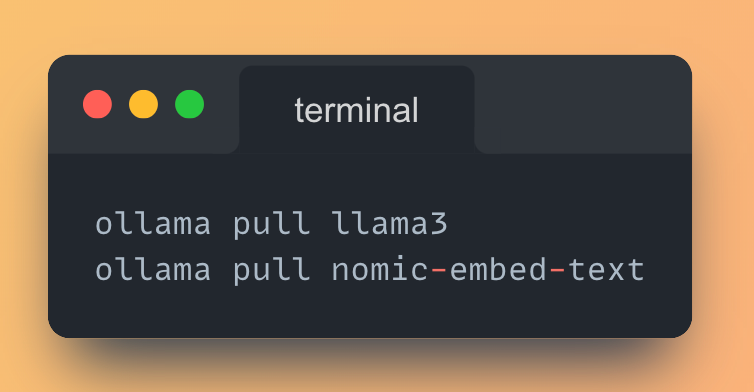
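You can confirm from Python that the installation worked with a small check like the one below. It is a sketch. It assumes the import names `streamlit` and `scrapegraphai`, and `missing_packages` is a hypothetical helper that is not part of either library.

```python
import importlib.util


def missing_packages(names):
    """Return the top-level module names that cannot be found in this environment."""
    # find_spec returns None for a top-level module that is not installed
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    # Assumed import names for Streamlit and ScrapeGraphAI
    missing = missing_packages(["streamlit", "scrapegraphai"])
    if missing:
        print("Missing packages:", ", ".join(missing))
    else:
        print("All required packages are installed.")
```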

2. Download Ollama and pull the following models (for example with `ollama pull llama3` and `ollama pull nomic-embed-text`):
   - Llama-3 as the main LLM
   - nomic-embed-text as the embedding model

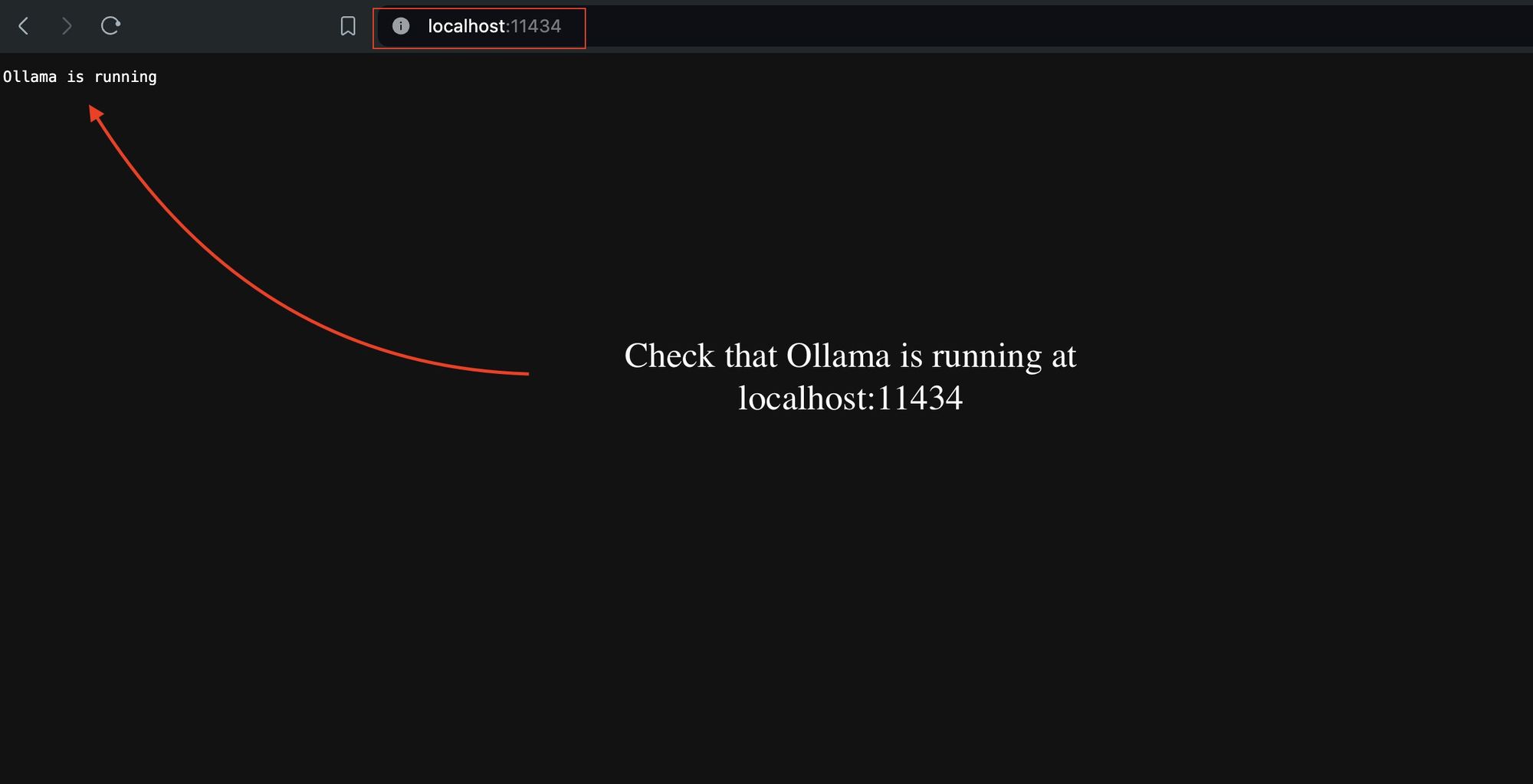
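Once the Ollama server is running (step 3), you can confirm that both models were pulled by querying its `/api/tags` endpoint, which lists the locally available models. The sketch below uses only the standard library. The helper names are hypothetical. It compares names without their `:tag` suffix, so `llama3:70b` would also count as `llama3`.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # Ollama's default address


def installed_models(tags_response):
    """Extract base model names (without the ':tag' suffix) from an /api/tags response."""
    return {m["name"].split(":", 1)[0] for m in tags_response.get("models", [])}


def missing_models(tags_response, required):
    """Return the required models that do not appear in the /api/tags response."""
    have = installed_models(tags_response)
    return [name for name in required if name not in have]


def fetch_tags(base_url=OLLAMA_URL, timeout=5):
    """Ask the local Ollama server which models have been pulled."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        return json.load(resp)
```

With the server up, `missing_models(fetch_tags(), ["llama3", "nomic-embed-text"])` should return an empty list when both models are present.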

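3. Check that Ollama is running at localhost on port 11434. Opening http://localhost:11434 in a browser should show "Ollama is running". If it is not, start the server with `ollama serve`. This command takes no model name, because models are loaded when a request asks for them.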

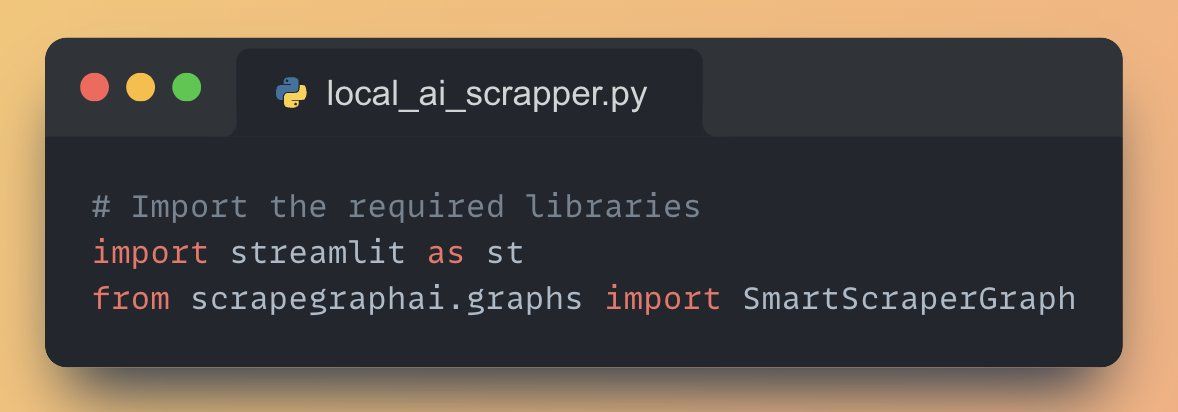

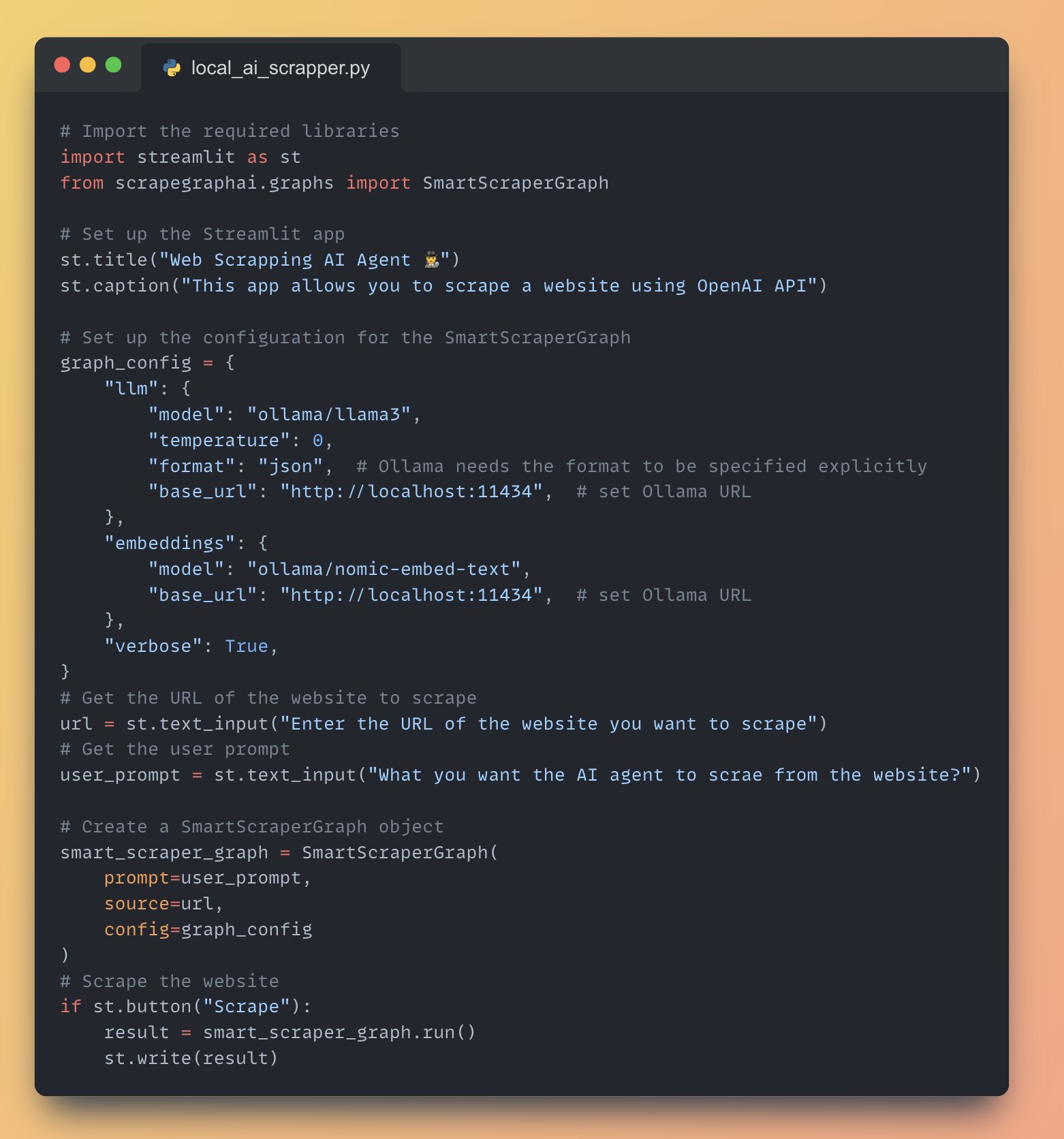
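The same check can be scripted. This sketch uses a hypothetical function name and treats any HTTP 200 reply at the base URL as a running server. Any connection error, timeout, or error status counts as "not running".

```python
import urllib.error
import urllib.request


def ollama_is_running(base_url="http://localhost:11434", timeout=2.0):
    """Return True if an HTTP server answers with status 200 at base_url."""
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as resp:
            return resp.status == 200
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, DNS failure, or an HTTP error status
        return False
```

If `ollama_is_running()` returns `False`, start the server with `ollama serve` in a separate terminal and try again.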

4. Import the necessary libraries:
   - Streamlit for building the web app
   - ScrapeGraphAI for creating scraping pipelines with LLMs

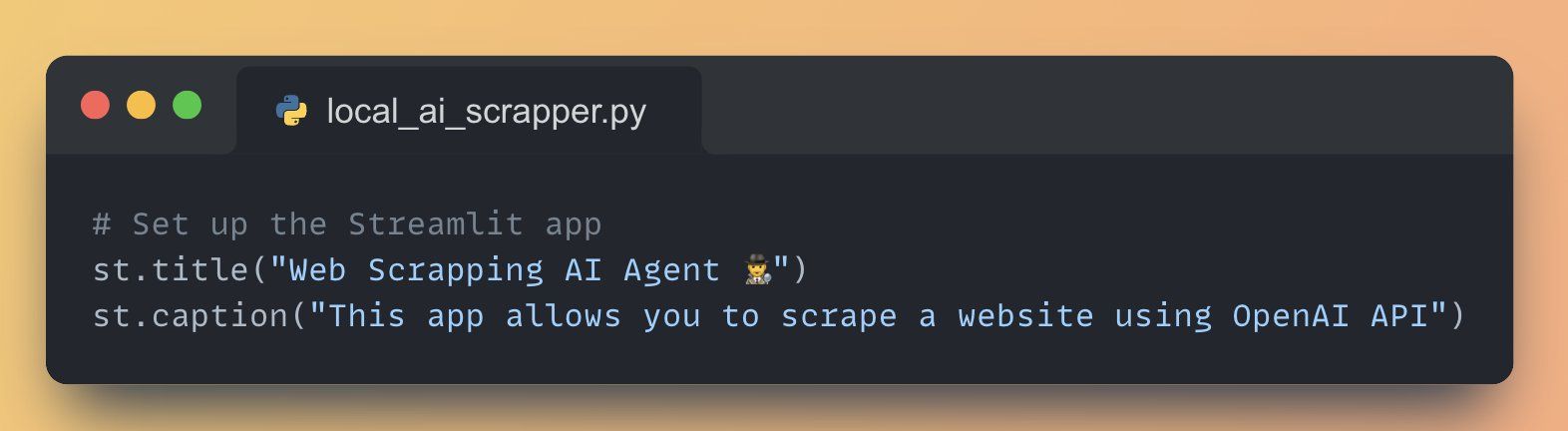

5. Set up the Streamlit app. Streamlit lets you create a user interface with just Python code. For this app we will:
   - Add a title to the app using `st.title()`

   - Create a text input box for the user to enter their OpenAI API key using `st.text_input()` (with both models served locally by Ollama, the configuration in step 6 does not need this key)

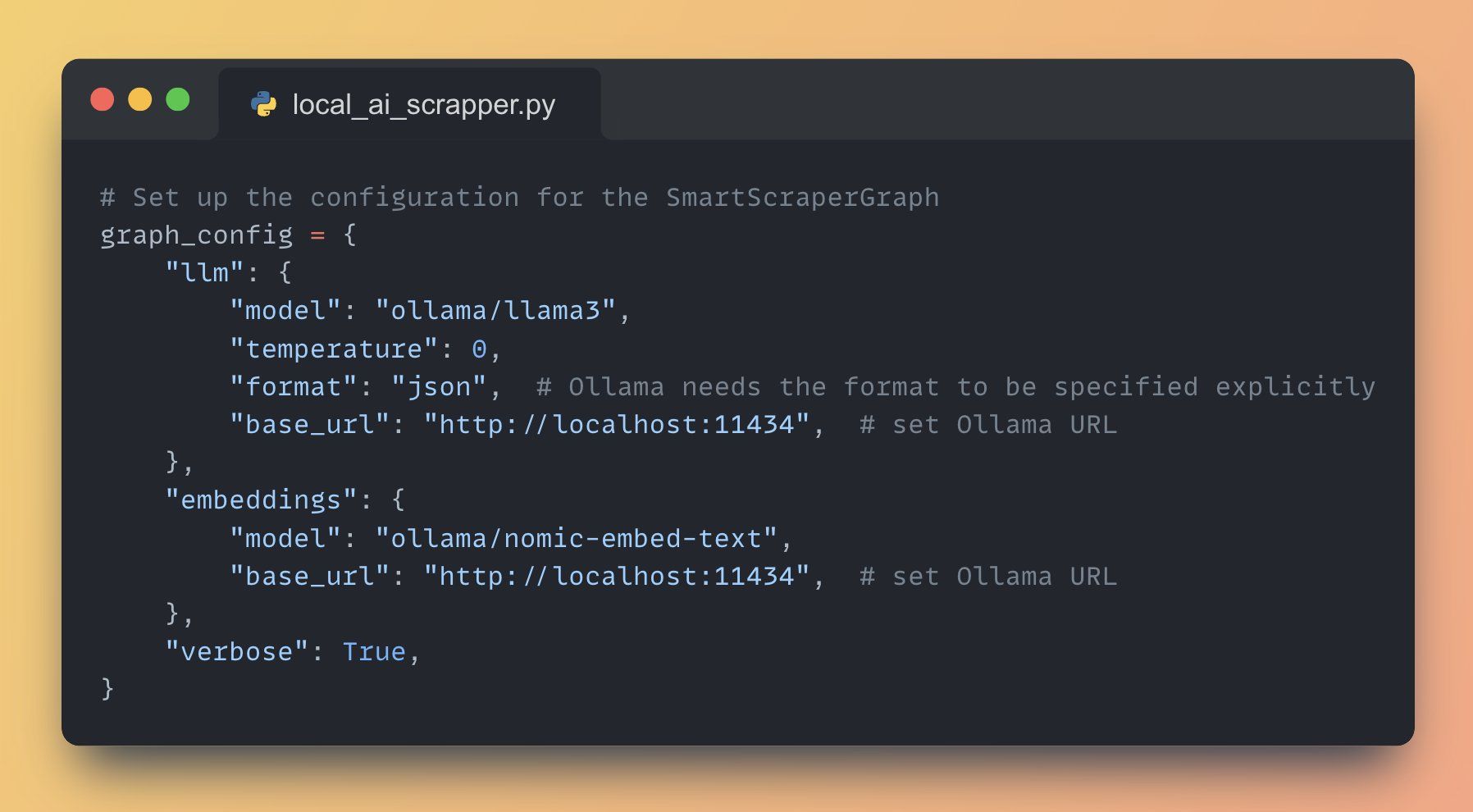

6. Configure the SmartScraperGraph:
   - Set the LLM to `ollama/llama3`, served locally, with the output format set to JSON.
   - Set the embedding model to `ollama/nomic-embed-text`.

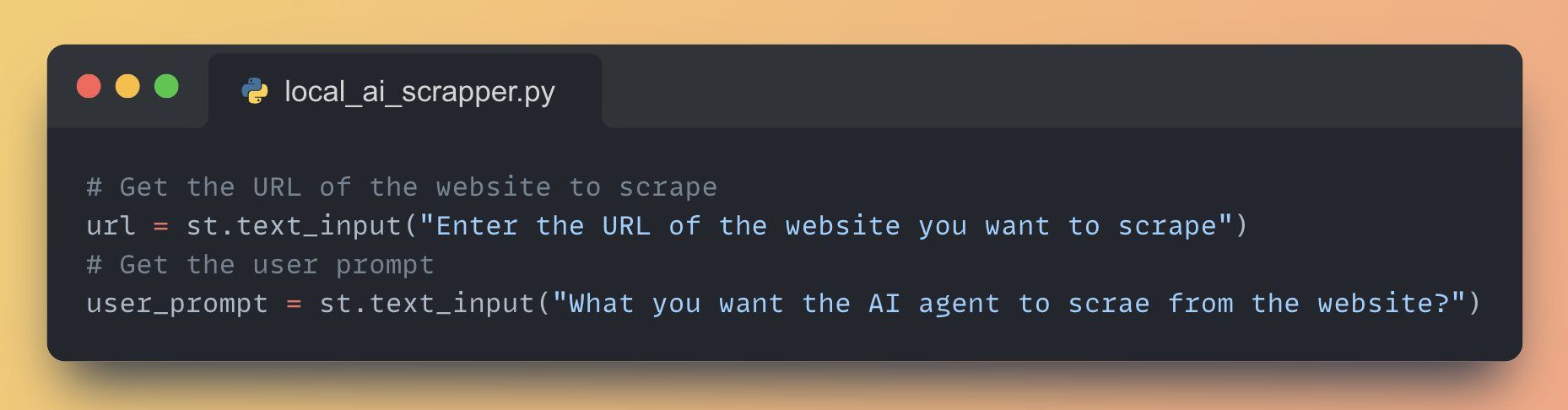
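As a plain Python dictionary, the configuration might look like the sketch below. The key names (`llm`, `embeddings`, `model`, `temperature`, `format`, `base_url`) follow ScrapeGraphAI's Ollama examples from the Llama-3 period. Check them against the version you install, because the configuration schema has changed between releases. `temperature: 0` and the `build_graph_config` helper are assumptions, not part of the steps above.

```python
OLLAMA_BASE_URL = "http://localhost:11434"  # default local Ollama address


def build_graph_config(base_url=OLLAMA_BASE_URL):
    """Return a SmartScraperGraph config that points at local Ollama models."""
    return {
        "llm": {
            "model": "ollama/llama3",  # main LLM served by Ollama
            "temperature": 0,  # assumption: low randomness suits extraction
            "format": "json",  # ask Ollama for JSON output
            "base_url": base_url,
        },
        "embeddings": {
            "model": "ollama/nomic-embed-text",  # embedding model served by Ollama
            "base_url": base_url,
        },
    }
```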

7. Get the website URL and the user prompt:
   - Use `st.text_input()` to get the URL of the website to scrape.
   - Use `st.text_input()` to get the user prompt specifying what to scrape from the website.

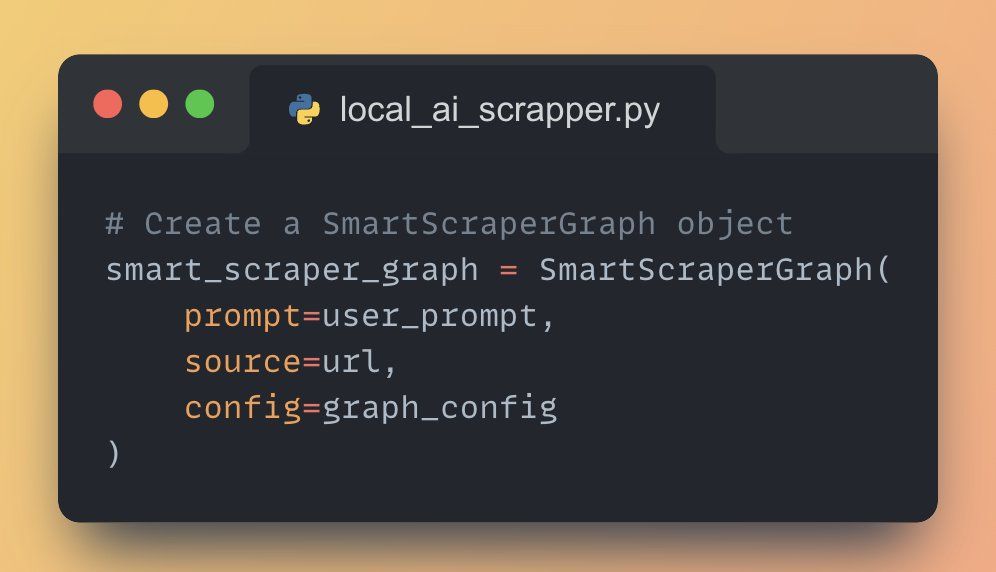
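Both inputs are free text, so checking them before handing them to the scraper can help. The helper below is an optional addition and not part of the original app. It assumes that only http(s) URLs are entered, and the name `validate_inputs` is hypothetical.

```python
from urllib.parse import urlparse


def validate_inputs(url, prompt):
    """Return a list of problems with the URL and prompt (empty if both look usable)."""
    problems = []
    parsed = urlparse(url.strip())
    # Require an explicit http(s) scheme and a host, e.g. https://example.com
    if parsed.scheme not in ("http", "https") or not parsed.netloc:
        problems.append("Enter a full http(s) URL, e.g. https://example.com")
    if not prompt.strip():
        problems.append("Describe what you want to scrape from the page")
    return problems
```

In the app, you could call it after the two `st.text_input()` calls and show each message with `st.warning()` instead of running the graph.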

8. Initialize the SmartScraperGraph: create an instance of `SmartScraperGraph` with the user prompt, the website URL, and the graph configuration.

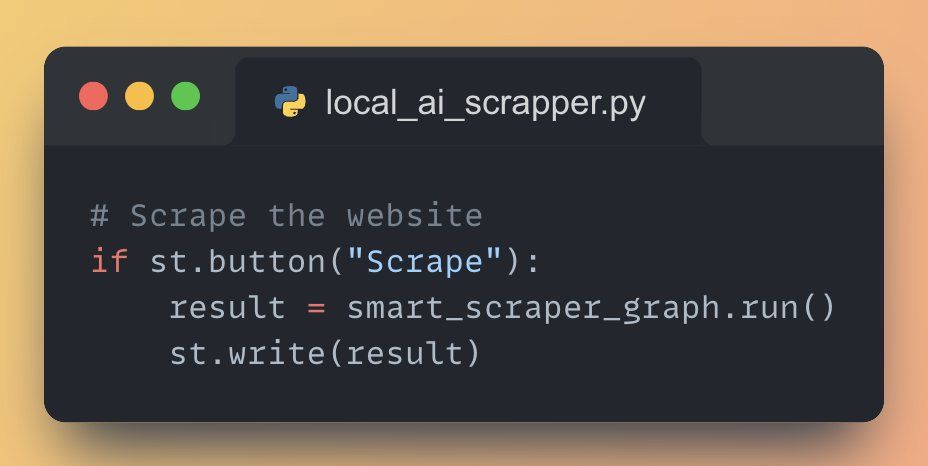

9. Scrape the website and display the result:
   - Add a "Scrape" button using `st.button()`.
   - When the button is clicked, run the SmartScraperGraph and display the result using `st.write()`.
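Steps 5 and 7 to 9 fit together roughly as follows. This is a sketch, not the original application code. The function name `render_app` is hypothetical and the labels are illustrative. It omits the OpenAI API key field because the local configuration does not read it. Streamlit and the graph class are passed in as parameters so that the logic can be tried without either package installed.

```python
def render_app(st, graph_cls, graph_config):
    """Draw the scraper UI and run the graph when the user clicks "Scrape".

    st is the streamlit module and graph_cls is SmartScraperGraph; both are
    parameters so the function can be exercised with stand-in objects.
    """
    st.title("Web Scraping AI Agent")

    # Step 7: ask for the page to scrape and what to extract from it
    url = st.text_input("Enter the URL of the website you want to scrape")
    user_prompt = st.text_input("What do you want the AI agent to scrape from the website?")

    # Step 9: only build and run the graph when the button is pressed
    if st.button("Scrape"):
        # Step 8: one graph instance per request, using the config from step 6
        graph = graph_cls(prompt=user_prompt, source=url, config=graph_config)
        result = graph.run()
        st.write(result)
        return result
    return None
```

To run it, put the function in `local_ai_scrapper.py` with `import streamlit as st` and `from scrapegraphai.graphs import SmartScraperGraph` at the top. Then add a module-level call `render_app(st, SmartScraperGraph, graph_config)`, where `graph_config` is the dictionary from step 6. Streamlit reruns the whole script on every interaction, so that call is all the wiring needed. The graph is created inside the button branch, so Streamlit's reruns never build a graph from empty inputs. The original step 8 creates the graph before the button, which also works.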

Full application code: running the web scraper AI agent with local Llama-3 using Ollama

Working application demo using Streamlit. Paste the full code into VS Code or PyCharm, save it as `local_ai_scrapper.py`, and run the following command: `streamlit run local_ai_scrapper.py`

That's all!

FAQ

What Python libraries are necessary for this process?

You will need to install several Python libraries, including Streamlit for building the web app and ScrapeGraphAI for creating scraping pipelines with LLMs.

What models do I need to download?

You need to download Ollama and use it to pull Llama-3 as the main LLM and nomic-embed-text as the embedding model.

How do I check if Ollama is running correctly?

Ollama should be running at localhost on port 11434. If it is not, start the server with `ollama serve`. The command takes no model name.

How do I set up the Streamlit App?

Streamlit lets you create a user interface with just Python code. For this app, you add a title with `st.title()` and a text input box with `st.text_input()` for the user's OpenAI API key, although the local Ollama configuration does not use that key.

What is the SmartScraperGraph configuration?

Set the LLM to `ollama/llama3`, served locally, with the output format set to JSON, and set the embedding model to `ollama/nomic-embed-text`.

How do I get the website URL and user prompt?

Use `st.text_input()` twice: once for the URL of the website to scrape and once for the user prompt specifying what to scrape from it.

How do I initialize the SmartScraperGraph?

You can create an instance of SmartScraperGraph with the user prompt, website URL, and graph configuration.

How do I scrape the website and display the result?

Add a "Scrape" button using `st.button()`. When the button is clicked, run the SmartScraperGraph and display the result using `st.write()`.

How do I run the full application code?

Paste the provided code into VS Code or PyCharm, save it as `local_ai_scrapper.py`, and run the following command: `streamlit run local_ai_scrapper.py`.