Scrapy and Selenium are two popular tools for web scraping, but they solve different problems. Selenium automates a real web browser, while Scrapy is a crawling framework that downloads and parses pages without one. To choose between them, it helps to understand what each tool does and the key differences that set them apart.

Below, we look at what each tool does, when to opt for Selenium, when to use Scrapy, and how that choice affects your web scraping project.

Selenium

Selenium is a browser automation framework originally built for testing web applications. Because it can drive a browser the same way a test script does, it is also a valuable tool for web scraping.

What is Selenium?

Selenium is an open-source framework that provides a suite of tools for automating web browsers. It supports multiple programming languages, including Python, Java, and C#, making it accessible to a wide range of developers. Selenium WebDriver, a key component, allows users to control browser actions programmatically, enabling tasks like form filling, clicking buttons, and navigating through web pages.

Advantages of Selenium

1. Dynamic Content Handling: Selenium excels at handling dynamic content generated by JavaScript. Because it runs a real browser that executes the page's scripts, it can read the DOM (Document Object Model) after JavaScript has updated it, which makes it suitable for scraping data from modern, interactive websites.

2. Wide Browser Support: Selenium supports various browsers, including Chrome, Firefox, Safari, and Edge. This flexibility allows developers to choose the browser that best fits their scraping needs.

3. User Emulation: Selenium can emulate user behavior, navigating through pages and interacting with elements just like a human user would. This is advantageous for scraping scenarios where user interaction is necessary.

Limitations of Selenium

1. Resource Intensive: Selenium launches a full browser, which consumes significant CPU and memory. This makes it slower than lighter-weight scraping tools, especially when dealing with a large number of requests.

2. Steep Learning Curve: Selenium's broad feature set can make it hard for beginners to master. Browser automation brings its own concepts, such as locating elements in the DOM and waiting for them to appear, and these take time to learn.

3. Detection Challenges: Websites have become adept at detecting automated bot activities, and Selenium is not immune to detection. Some websites employ measures to identify and block Selenium-driven scrapers, requiring additional efforts to circumvent detection mechanisms.

Scrapy

Scrapy is a high-level, open-source web crawling and web scraping framework for Python. Unlike Selenium, which focuses on browser automation, Scrapy is designed specifically for crawling websites and extracting data from them efficiently.

What is Scrapy?

Scrapy is a Python framework built for web crawling and scraping tasks. It provides a structured way to define how to navigate websites, which elements to extract, and how to store the scraped data. The framework adopts a modular architecture, employing spiders to define the crawling logic and pipelines to process and store the extracted information.
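To make the pipeline idea concrete, here is a minimal sketch of an item pipeline. Scrapy calls `process_item(item, spider)` on each enabled pipeline and passes the returned item on to the next one; items can be plain dicts. The class name `PriceCleanupPipeline`, the `price` field, and the `myproject` module path are hypothetical. Because a pipeline is an ordinary class, the sketch runs without importing Scrapy.

```python
from decimal import Decimal, InvalidOperation


class PriceCleanupPipeline:
    """Hypothetical pipeline: converts a scraped price string such as '$1,299.00' to a Decimal."""

    def process_item(self, item, spider):
        raw = item.get("price")
        if isinstance(raw, str):
            # Keep only digits and the decimal point, dropping currency symbols and separators.
            cleaned = "".join(ch for ch in raw if ch.isdigit() or ch == ".")
            try:
                item["price"] = Decimal(cleaned)
            except InvalidOperation:
                # Empty or malformed strings (e.g. "N/A") become None instead of stopping the crawl.
                item["price"] = None
        # Returning the item hands it to the next pipeline (or to Scrapy's output if this is the last one).
        return item


# In settings.py, pipelines are enabled with an order value; lower values run first.
ITEM_PIPELINES = {
    "myproject.pipelines.PriceCleanupPipeline": 300,
}
```

Keeping cleanup in a pipeline rather than in the spider means every spider in the project gets the same normalization.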

Advantages of Scrapy

- Efficiency and Speed: Scrapy is known for its efficiency and speed. It is built on Twisted, an event-driven networking engine, so it can send many requests concurrently instead of waiting for each response in turn. This makes it particularly effective for large-scale scraping tasks where speed is essential.

- Structured Data Extraction: Scrapy simplifies the process of data extraction through its built-in mechanisms. Selectors and item pipelines help organize and process data, providing a structured approach to scraping.

- Extensibility: Scrapy is highly extensible and customizable. Developers can adapt and extend its functionality to suit specific scraping requirements, making it a versatile choice for diverse web scraping projects.

Limitations of Scrapy

- JavaScript Rendering: Scrapy does not execute JavaScript on its own; it only sees the HTML the server returns. Content that JavaScript loads later can sometimes be reached by requesting the underlying API endpoints directly, or rendered with an add-on such as Splash, but pages that require browser-level interaction remain hard to scrape with Scrapy alone.

- Learning Curve: For newcomers to web scraping, Scrapy may pose a learning curve. Understanding its architecture, especially the concepts of spiders and pipelines, may take time for those unfamiliar with the framework.

- Limited Browser Interaction: Scrapy doesn't provide the same level of browser interaction as Selenium. If a scraping task requires simulating user behavior on dynamic websites, Scrapy might not be the most suitable choice.
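A common workaround for the JavaScript limitation is to skip the rendered page altogether. Content that JavaScript fills in usually comes from a JSON endpoint, which you can spot in the browser's developer tools (Network tab) and request directly. The sketch below assumes a hypothetical endpoint `https://example.com/api/products` that returns `{"items": [...]}`; it only builds the request URL and parses the payload with the standard library. In a Scrapy spider, the same parsing would run on the response body.

```python
import json
from urllib.parse import urlencode

# Hypothetical endpoint discovered in the browser's Network tab.
API_BASE = "https://example.com/api/products"


def build_api_url(page: int, per_page: int = 20) -> str:
    """Build the URL the page's JavaScript would request for one page of results."""
    return f"{API_BASE}?{urlencode({'page': page, 'per_page': per_page})}"


def parse_products(payload: str) -> list[dict]:
    """Extract name/price pairs from the assumed JSON response shape."""
    data = json.loads(payload)
    return [
        {"name": entry["name"], "price": entry["price"]}
        for entry in data.get("items", [])
    ]
```

JSON responses are also more stable than HTML layouts, so this approach tends to break less often when a site is redesigned.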

Scrapy vs Selenium: An In-depth Comparison

The two tools overlap less than it might seem: Selenium is a browser automation tool that can also scrape, while Scrapy is a scraping framework that does not run a browser. The table and comparison below summarize the practical differences.

Scraping with Selenium vs Scraping with Scrapy

| Feature | Selenium | Scrapy |
|---|---|---|
| Type | Web browser automation tool | Dedicated web scraping framework |
| Interaction | Simulates user actions in a real browser | Structured approach using spiders and pipelines |
| JavaScript | Executes JavaScript, so dynamic content is rendered | Does not execute JavaScript without an add-on such as Splash |
| Suitability | Highly interactive websites | Large-scale scraping |
| Strengths | Flexibility, ability to handle complex interactions | Efficiency, scalability |
| Weaknesses | Slower and more resource-intensive than Scrapy | No built-in JavaScript rendering; requires more configuration |
| Overall | A powerful tool for dynamic scraping tasks | An efficient choice for large-scale scraping projects |

Side-by-side Comparison: Scrapy vs. Selenium

- Use Case: Selenium is ideal for scenarios where browser interaction and JavaScript execution are critical, making it suitable for dynamic and interactive websites. Scrapy, with its focus on efficiency and structured data extraction, is better suited for large-scale scraping projects where speed and organization are paramount.

- Speed and Efficiency: Scrapy's asynchronous operation allows for faster scraping, especially when dealing with a large number of requests. Selenium, while powerful, can be resource-intensive and slower due to its browser automation approach.

- Learning Curve: Selenium may have a steeper learning curve, particularly for those new to web scraping. Its extensive capabilities and browser automation concepts require a deeper understanding. Scrapy, though not without complexity, may be more approachable for beginners.

- JavaScript Handling: Selenium excels in handling JavaScript-based content rendering, while Scrapy may face challenges in scenarios where extensive JavaScript interactions are required.
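Much of the speed difference comes from concurrency. A single Selenium-driven browser loads one page at a time, while Scrapy keeps many requests in flight (16 by default, set by the `CONCURRENT_REQUESTS` setting). The sketch below is not Scrapy code: it uses Python's `asyncio` with simulated 0.1-second responses to show why overlapping network waits matters, and a semaphore caps concurrency in the same spirit as `CONCURRENT_REQUESTS`.

```python
import asyncio
import time

SIMULATED_LATENCY = 0.1  # seconds; stands in for network round-trip time


async def fake_fetch(url: str) -> str:
    """Pretend to download a page: wait, then return placeholder HTML."""
    await asyncio.sleep(SIMULATED_LATENCY)
    return f"<html>{url}</html>"


async def crawl_sequential(urls: list[str]) -> list[str]:
    # One request at a time, like a single browser loading pages in turn.
    return [await fake_fetch(u) for u in urls]


async def crawl_concurrent(urls: list[str], limit: int = 16) -> list[str]:
    # Up to `limit` requests in flight at once.
    semaphore = asyncio.Semaphore(limit)

    async def bounded(url: str) -> str:
        async with semaphore:
            return await fake_fetch(url)

    # gather() returns results in the same order as the input URLs.
    return await asyncio.gather(*(bounded(u) for u in urls))


def timed(crawl_fn, urls: list[str]):
    """Run one crawl strategy and return (pages, elapsed seconds)."""
    start = time.perf_counter()
    pages = asyncio.run(crawl_fn(urls))
    return pages, time.perf_counter() - start


if __name__ == "__main__":
    urls = [f"https://example.com/page/{i}" for i in range(10)]
    _, seq = timed(crawl_sequential, urls)
    _, conc = timed(crawl_concurrent, urls)
    print(f"sequential: {seq:.2f}s, concurrent: {conc:.2f}s")
```

With ten simulated pages, the sequential crawl takes roughly ten times the latency, while the concurrent crawl finishes in roughly one latency period.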

In choosing between Scrapy and Selenium, the nature of the scraping task, the level of interactivity on the target websites, and the preference for speed and efficiency all play vital roles. It's a decision that hinges on the specific requirements of the project at hand.

Can Scrapy and Selenium Be Used Together? A Collaborative Approach

In certain web scraping scenarios, combining Scrapy and Selenium yields a flexible solution. Scrapy handles crawling and structured data extraction efficiently, while Selenium handles the dynamic, JavaScript-heavy pages that Scrapy cannot render on its own. Together, they cover a broader range of sites than either tool alone.

How to Implement the Scrapy-Selenium Combination

Integrating Scrapy with Selenium means letting each framework do what it does best. One common approach is to call the Selenium WebDriver only for the pages or elements that require dynamic interaction, either from within a spider callback or from a downloader middleware that renders selected requests in the browser and passes the resulting HTML back to Scrapy for parsing.

- Identify Target Pages: Determine which pages or elements within a website necessitate the use of Selenium for effective scraping. These are typically pages with dynamic content or heavy reliance on JavaScript.

- Integrate Selenium in Scrapy Spider: Within the Scrapy spider, implement Selenium to navigate and interact with the identified pages. Use the Selenium WebDriver to simulate user actions, such as clicking buttons or handling dynamic elements.

- Combine Data Extraction Methods: Scrapy's structured data extraction mechanisms, such as item pipelines and selectors, can still be employed for the majority of the scraping process. Selenium comes into play selectively for specific interactions where it excels.

- Handle Asynchronous Operations: Scrapy processes requests asynchronously, but Selenium's WebDriver calls are blocking. Calling Selenium directly inside a spider callback pauses Scrapy's event loop until the browser finishes, so limit Selenium to the pages that truly need it, or run it in a separate thread or process, to preserve Scrapy's speed and scalability.

- Optimize for Performance: Fine-tune the combination to optimize performance. This may involve adjusting the frequency of Selenium-driven interactions and utilizing Scrapy's capabilities for parallel processing.
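The steps above can be sketched without Scrapy or a real browser. This example uses `asyncio` as a stand-in for Scrapy's event loop; `render_with_browser` is a hypothetical placeholder for a blocking Selenium call (it only sleeps), and `BROWSER_PATTERNS` is an assumed list of URL fragments that mark JavaScript-dependent pages. Pages that need a browser are rendered in a worker thread with `asyncio.to_thread`, so ordinary downloads keep running in the meantime. In Twisted-based Scrapy code, a comparable tool is `twisted.internet.threads.deferToThread`.

```python
import asyncio
import time

# Assumed URL fragments marking pages whose content only appears after JavaScript runs.
BROWSER_PATTERNS = ("/app/", "#!")


def needs_browser(url: str) -> bool:
    """Identify Target Pages: decide which URLs must go through the browser."""
    return any(pattern in url for pattern in BROWSER_PATTERNS)


def render_with_browser(url: str) -> str:
    """Hypothetical stand-in for a blocking Selenium call (e.g. driver.get, then driver.page_source)."""
    time.sleep(0.2)  # blocks the calling thread, like waiting for a real browser
    return f"rendered:{url}"


async def download(url: str) -> str:
    """Stand-in for Scrapy's normal, non-blocking download."""
    await asyncio.sleep(0.05)
    return f"downloaded:{url}"


async def fetch(url: str) -> str:
    if needs_browser(url):
        # Handle Asynchronous Operations: run the blocking browser work in a thread
        # so the event loop stays free. A single WebDriver instance must not be
        # driven from several threads at once; use one driver per thread or a lock.
        return await asyncio.to_thread(render_with_browser, url)
    return await download(url)


async def crawl(urls: list[str]) -> list[str]:
    return await asyncio.gather(*(fetch(u) for u in urls))
```

Only the flagged URLs pay the cost of browser rendering; everything else stays on the fast path.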

By strategically combining Scrapy and Selenium, developers can harness the strengths of both frameworks, creating a versatile and efficient web scraping solution. This collaborative approach is particularly valuable when dealing with complex websites that demand a nuanced scraping strategy.

Understanding Specific Use-Cases for Both Tools

Which tool to use depends on how the target website delivers its content and what the scraping task requires. The following guidelines cover the most common cases.

For Dynamic Content - Choose Selenium

Selenium is the preferred choice for dynamic web pages. Websites that rely heavily on JavaScript to load and render content are best handled with Selenium. Its ability to simulate real user interactions, such as button clicks and form submissions, lets you scrape content that only appears after those interactions.
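Dynamic pages are the main reason Selenium scripts need waits: an element may not exist until a script adds it. Selenium's `WebDriverWait(driver, timeout).until(condition)` handles this by polling a condition until it returns a truthy value or the timeout expires. The stdlib sketch below reimplements that polling idea so it runs without a browser; `wait_until` and `SimulatedPage` are hypothetical, and real code would use `WebDriverWait` with a condition such as `expected_conditions.presence_of_element_located`.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def wait_until(condition: Callable[[], T], timeout: float = 10.0, poll: float = 0.5) -> T:
    """Call `condition` repeatedly until it returns something truthy (simplified WebDriverWait.until)."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(poll)


class SimulatedPage:
    """Hypothetical page whose product list 'loads' some time after it is opened."""

    def __init__(self, load_delay: float = 0.3):
        self._ready_at = time.monotonic() + load_delay

    def find_products(self) -> list[str]:
        # Empty until the simulated JavaScript has run, then returns the content.
        if time.monotonic() < self._ready_at:
            return []
        return ["Laptop", "Mouse"]
```

Reading the page immediately returns nothing, while waiting on the condition returns the content once it appears. Selenium's real `WebDriverWait` additionally passes the driver to the condition and, by default, ignores `NoSuchElementException` while polling.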

For Static Content - Choose Scrapy

When the target website primarily features static content without intricate JavaScript elements, Scrapy stands out as the more efficient solution. Scrapy excels at navigating and extracting data from static web pages, offering a streamlined and faster process compared to Selenium in such scenarios.
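On a static page the data is already in the HTML the server returns, so no browser is needed: download the page and parse it. In Scrapy, that parsing is usually a selector such as `response.css("h2.title::text").getall()`. The stdlib-only sketch below does the same job with `html.parser` on a hardcoded HTML sample (the markup and the `title` class are made up) to show that extraction needs nothing more than the response text.

```python
from html.parser import HTMLParser


class TitleExtractor(HTMLParser):
    """Collect the text of every <h2> element whose class list includes 'title'."""

    def __init__(self):
        super().__init__()
        self._in_title = False
        self.titles: list[str] = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; value may be None.
        classes = (dict(attrs).get("class") or "").split()
        if tag == "h2" and "title" in classes:
            self._in_title = True
            self.titles.append("")

    def handle_endtag(self, tag):
        if tag == "h2":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.titles[-1] += data


def extract_titles(html: str) -> list[str]:
    parser = TitleExtractor()
    parser.feed(html)
    parser.close()
    return [t.strip() for t in parser.titles]


SAMPLE_HTML = """
<html><body>
  <h2 class="title">First article</h2>
  <p>Teaser text</p>
  <h2 class="title featured">Second article</h2>
  <h2>Not a title</h2>
</body></html>
"""
```

Calling `extract_titles(SAMPLE_HTML)` returns the two titled headings and skips the unclassed one. Scrapy's selectors do this work with far less code, which is part of why it suits static sites.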

For Interactive Sites - Consider Selenium

Websites that demand user interaction, like those with complex forms or multi-step processes, call for the interactive capabilities of Selenium. Its capacity to automate browser actions in a way that mimics human behavior makes it the go-to choice for scraping data from interactive sites.

For Large-Scale Dynamic Scraping - Consider Scrapy with Splash

When large-scale scraping of dynamic pages is required, combining Scrapy with Splash is a strong option. Splash is a lightweight JavaScript rendering service, essentially a headless browser exposed through an HTTP API, and the scrapy-splash plugin lets Scrapy send requests through it so that pages are rendered before they are parsed. Because rendering happens in a separate service, Scrapy's asynchronous engine keeps running, which makes this combination suited to scraping large amounts of JavaScript-rendered content.
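Splash is driven through plain HTTP. With a Splash instance running locally (typically from its Docker image, listening on port 8050 by default), requesting `/render.html` with a `url` argument returns the page's HTML after its JavaScript has run, and the `wait` argument adds a pause in seconds for late-loading scripts. The scrapy-splash plugin wraps this in `SplashRequest`; the stdlib sketch below calls the endpoint directly to show what happens underneath. The target URL is hypothetical, and `fetch_rendered` only works if a Splash instance is reachable at the assumed address.

```python
from urllib.parse import urlencode
from urllib.request import urlopen

SPLASH_URL = "http://localhost:8050"  # assumed local Splash instance


def render_url(target: str, wait: float = 1.0) -> str:
    """Build a Splash render.html request URL for `target`."""
    query = urlencode({"url": target, "wait": wait})
    return f"{SPLASH_URL}/render.html?{query}"


def fetch_rendered(target: str, wait: float = 1.0, timeout: float = 30.0) -> str:
    """Ask Splash to load `target`, run its JavaScript, and return the resulting HTML."""
    with urlopen(render_url(target, wait), timeout=timeout) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset)
```

The returned HTML can then be parsed exactly like a static page, which is what makes Splash fit naturally into a Scrapy workflow.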

Alternatives to Consider for Selenium and Scrapy

As the landscape of web scraping tools continues to evolve, exploring alternatives to both Selenium and Scrapy can offer additional options for developers seeking specialized solutions. While Selenium and Scrapy have established themselves as robust choices, the following tools provide alternative approaches to address specific scraping requirements.

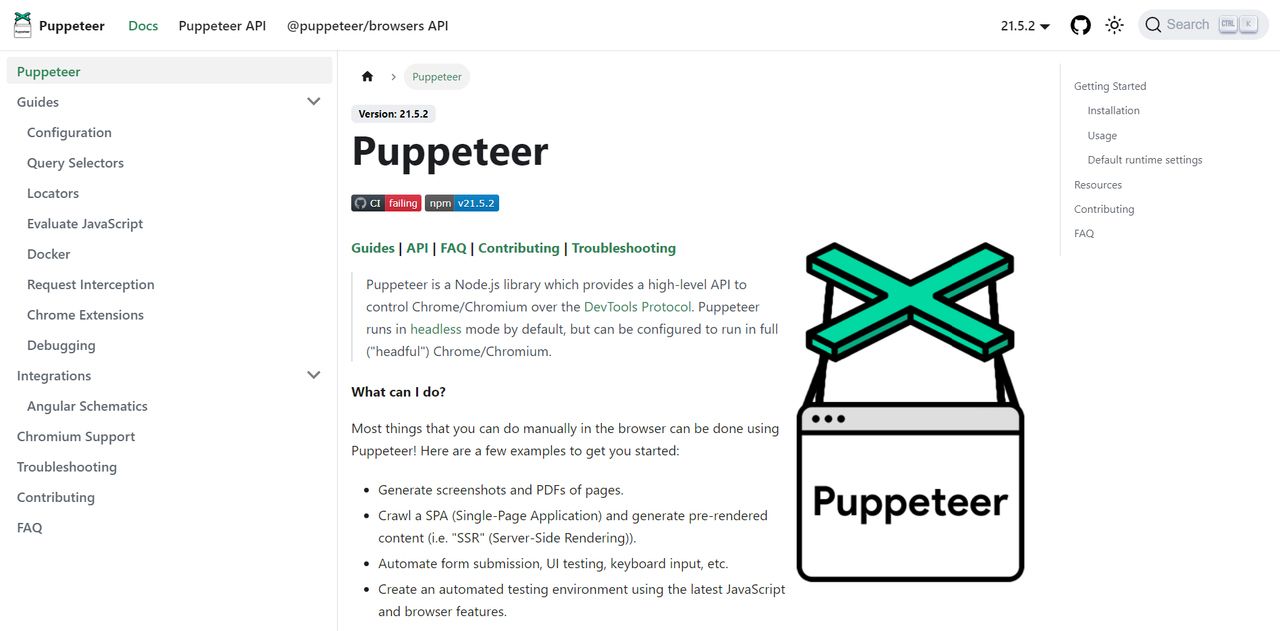

1. Puppeteer

Puppeteer, developed by Google, is a Node.js library that provides a high-level API to control headless browsers or full browsers. It excels in scenarios where browser automation and rendering are crucial. With its focus on Chrome, Puppeteer is adept at handling JavaScript-heavy websites and is particularly suitable for tasks requiring browser-level interaction.

2. Beautiful Soup

Beautiful Soup is a Python library designed for pulling data out of HTML and XML files. While it lacks the browser automation capabilities of Selenium, it shines in scenarios where the goal is to parse and extract data from static HTML content. Paired with a library like Requests for HTTP requests, Beautiful Soup offers a lightweight alternative for simple scraping tasks.

3. Pyppeteer

Pyppeteer is an unofficial Python port of Puppeteer. It provides browser automation and JavaScript rendering through an API modeled on Puppeteer's, but within a Python environment. Pyppeteer is a suitable alternative for developers who appreciate Puppeteer's capabilities but prefer working in Python.

4. Apache Nutch

Apache Nutch is an open-source web crawler written in Java. It offers a scalable and extensible solution for web scraping, particularly in the context of building search engines or large-scale web crawlers. Apache Nutch's modular architecture allows developers to customize and extend its functionality based on project requirements.

Exploring alternatives to Selenium and Scrapy provides developers with a diverse toolkit, allowing them to choose tools that align more closely with the specific needs of their scraping projects. Each alternative brings its unique strengths and trade-offs, providing flexibility and adaptability in the ever-evolving field of web scraping.

Conclusion

The choice between Scrapy and Selenium depends on the specific requirements of the scraping task. Selenium's strength in browser automation and dynamic content handling makes it the right tool where user interaction and JavaScript execution are critical. Scrapy's efficiency in web crawling and structured data extraction makes it the better fit where a structured approach and scalability matter most.

The decision between Scrapy and Selenium is not one-size-fits-all; it hinges on factors such as the nature of the target websites and the level of interactivity needed. For dynamic and interactive sites, Selenium stands out, while Scrapy excels in handling static content and large-scale scraping endeavors.

As developers navigate the web scraping landscape, considering alternative tools like Puppeteer, Beautiful Soup, Pyppeteer, and Apache Nutch adds further dimensions to their toolkit. Each tool brings unique features and trade-offs, allowing developers to tailor their choice to the specific demands of the project.

Ultimately, success in web scraping lies in a thoughtful selection of tools, aligning them with the project's objectives. Whether opting for Scrapy, Selenium, or exploring alternatives, a strategic approach ensures efficient and effective data extraction from the diverse web environments developers encounter.

For further reading, you might be interested in the following: